Deduplicating Search Results

June 08, 2014 at 12:34 PM | categories: XQuery, MarkLogic | View CommentsSo you're writing a MarkLogic application, and this question comes up: How do we deduplicate search results?

In one sense MarkLogic will never return duplicates from a database lookup.

A single XPath, cts:search, or search:search expression will always

return unique nodes, as defined by the

XPath is operator.

But your application might have its own, content-dependent definition of a duplicate. This might depend on just a subset of the XML content. For example you might be storing news articles pulled from different media sources: newspapers, magazines, blogs, etc. Often the same news story will appear in different sources, and sometimes the text will be identical or extremely close. When a user searches for the hot story of the day you want to have all the variations available, but the search results should roll them up together on the page. You can see something like this if you search Google News.

One good strategy is to avoid duplicates entirely,

by ensuring that your documents have meaningful URIs.

Construct the URI using the same information

that determines whether or not a document is a duplicate.

This way if content arrives that duplicates existing content,

it turns out to have the same URI.

Then you are free to update the database with the latest copy,

ignore it, throw an error, or call for help.

If every news story has a dateline

and an author byline, we could construct document URIs

based on the date, location, and byline:

something like /news/2014/05/30/washington/jones.

But maybe that isn't a very good solution for our news application. Remember that we want to search for articles, but we only want one article per story. So we have to store all the duplicate articles, and we need query-time flexibility to display just one article per story.

Clearly we will need to generate a story-id for each story, one that remains the same no matter how different articles are presented. That might use a mechanism similar to the URI computation above, except that we would put the result in an element and it would not be unique. We could use the same facts we were going to use in the document URI:

<story-id>2014-05-30|washington|jones</story-id>

Once we have our application set up to generate story-id elements,

we could try a brute-force approach.

Search the database, then walk through the search results.

Extract each story-id value and check it

against a list of previously-seen story-id values.

We could use a map for that.

If the story-id has already been seen, ignore it.

Otherwise put the story-id in the map and return the article.

(

let $search-results := search:search(...)

let $seen := map:map()

for $article in $search-results

let $story-id as xs:string := $article/story-id

where not(map:contains($seen, $story-id))

return (

map:put($seen, $story-id, $story-id),

$article))[$start to $stop]

But there are problems with this approach. Pagination is tricky because we don't know how many duplicates there will be. So we have to ask the database for a lot of results, maybe all of them at once, and then filter and paginate in user code. This gets more and more expensive as the result size increases, and trickier to manage as the user paginates through the results. If a search matches a million articles, we might have to retrieve and check all the matches before we can display any results. That's going to be slow, and probably limited by I/O speeds. Nowadays we could throw SSD at it, but even SSD has limits.

Another problem with the brute-force approach is that facets generated by the database will not match the deduplicated results. You might have a facet on author that shows 1000, but deduplicated filters out all but 100 of the articles.

So let's look at another approach. Instead of deduplicating after we search,

let's deduplicate before we search. That might sound crazy,

but we have a couple of powerful tools that make it possible:

cts:value-co-occurrences

and cts:document-query.

The idea is to deduplicate

based on the co-occurrence of story-id and document URI,

without retrieving any documents.

Then we query the database again,

this time fetching only the non-duplicate documents

that we want to return.

Each article is stored as a document with a unique document URI.

We enable the

document URI lexicon

and we also create an element-range index on the element named story-id.

As described above, we construct a story-id

for every article as it arrives and add it to the XML.

This story-id is our deduplication key: it uniquely identifies a story,

and if multiple articles might have the same story-id value

then they are treated as duplicates.

A deduplication key is application-specific, and might be anything.

An application might even have multiple deduplication keys

for different query types.

However it's essential to have a deduplication key for every document

that you want to query, even if only some documents will have duplicates.

The technique we're going to use will only return documents

that have a deduplication key.

An article with no story-id simply won't show up in the co-occurrence

results, so it won't show up in search results either.

Here's some code to illustrate the idea. Start with $query-original,

which is the original user query as a cts:query item.

We might generate that using

search:parse

or perhaps the xqysp library.

(: For each unique story-id there may be multiple article URIs.

: This implementation always uses the first one.

:)

let $query-dedup := cts:document-query(

let $m := cts:values-co-occurrences(

cts:element-reference(

xs:QName('story-id'),

'collation=http://marklogic.com/collation/codepoint'),

cts:uri-reference(),

'map')

for $key in map:keys($m)

return map:get($m, $key)[1])

(: The document-query alone would match the right articles,

: but there would be no relevance ranking.

: Using both queries eliminates duplicates and preserves ranking.

:)

let $query-full := cts:and-query(($query-original, $query-dedup))

...

Now we can use $query-full with any API that uses cts:query items,

such as cts:search. In order to match, an article will have to match

$query-original and it will have to have one of the URIs

that we selected from the co-occurrence map.

Instead of calling cts:search directly, we might want to use

search:resolve.

That function expects a cts:query XML element, not a cts:query item.

So we need a little extra code to turn the cts:query item

into an XML document and then extract its root element:

...

return search:resolve(

document { $query-full }/*,

$search-options,

$pagination-start,

$pagination-size)

Many search applications also provide facets. You can ask search:resolve

for facets by providing the right search options,

or you can call cts:values yourself.

Note that since facets are not relevance-ranked,

it might be a little faster to use $query-dedup instead of $query-full.

Speaking of performance, how fast is this? In my testing it added

an O(n) component, linear with the number of keys in the

cts:values-co-occurrences map. With a small map the overhead is low,

and deduplicating 10,000 items only adds a few tens of milliseconds.

But with hundreds of thousands of map items the profiler

shows more and more time spent in the XQuery FLWOR expression

that extracts the first document URI from each map item.

let $m := cts:values-co-occurrences(

cts:element-reference(

xs:QName('story-id'),

'collation=http://marklogic.com/collation/codepoint'),

cts:uri-reference(),

'map')

for $key in map:keys($m)

return map:get($m, $key)[1])

We can speed that up a little bit by trading the FLWOR for function mapping.

declare function local:get-first(

$m as map:map,

$key as xs:string)

as xs:string

{

map:get($m, $key)[1]

};

let $m := cts:values-co-occurrences(

cts:element-reference(

xs:QName('story-id'),

'collation=http://marklogic.com/collation/codepoint'),

cts:uri-reference(),

'map')

return local:get-first($m, map:keys($m))

However this is a minor optimization, and with large maps it will still be expensive to extract the non-duplicate URIs. It's both faster and more robust than the brute-force approach, but not as fast as native search.

Pragmatically, I would try to handle these performance characteristics in the application. Turn deduplication off by default, and only enable it as an option when a search returns fewer than 100,000 results. This would control the performance impact of the feature, providing its benefits without compromising overall performance.

It's also tempting to think about product enhancements.

We could avoid some of this work if we could find a way

to retrieve only the part of the map needed for the current search page,

but this is not feasible with the current implementation

of cts:values-co-occurrences. That function would have to return

the co-occurrence map sorted by the score of each story-id.

That's tricky because normally scores are calculated for documents,

in this case articles.

One way to speed this up without changing MarkLogic Server

could be to move some of the work into the forests.

MarkLogic Server supports

User-Defined Functions,

which are C++ functions that run directly on range indexes.

I haven't tried this approach myself, but in theory you could write a UDF

that would deduplicate based on the story-id and URI co-occurrence.

Then you could call this function with

cts:aggregate.

This would work best if you could partition your forests

using the story-id value, so that any the duplicate values articles

are guaranteed to be in the same forest.

Used carefully this approach could be much faster,

possibly allowing fast deduplication with millions of URIs.

For more on that idea, see the documentation for Tiered Storage and the UDF plugin tutorial. If you try it, please let me know how it works out.

Introduction to Multi-Statement Transactions

June 21, 2013 at 12:34 PM | categories: XQuery, MarkLogic | View CommentsIf you are an old hand with MarkLogic, you are used to writing

update queries with implicit commits. Sometimes this

means restructuring your code so that everything can happen in one commit,

with no conflicting updates. In extreme cases you might

even decide to run multiple transactions from one query,

using xdmp:invoke or semicolons.

Historically this meant giving up atomicity.

Multi-statement transactions, introduced in MarkLogic 6, promise a third way. We can write a transaction that spans multiple statements, with an explicit commit or rollback.

For most updates it's probably best to stick with the old ways and use implicit commits. But let's look at a concrete example of a time when multi-statement transactions are the right tool for the job.

Suppose you are using DLS (Document Library Services) to manage your document versioning. But you have a special case where you want to insert two discrete versions of the same document atomically. That may sound odd, but I ran into that exact problem recently.

First we need to discover that there is a problem. Let's bootstrap the a test document with DLS.

import module namespace dls="http://marklogic.com/xdmp/dls"

at "/MarkLogic/dls.xqy";

try {

dls:document-delete('test', false(), false()) }

catch ($ex) {

if ($ex/error:code ne 'DLS-UNMANAGED') then xdmp:rethrow()

else if (empty(doc('test'))) then ()

else xdmp:document-delete('test') }

;

import module namespace dls="http://marklogic.com/xdmp/dls"

at "/MarkLogic/dls.xqy";

dls:document-insert-and-manage('test', false(), <x id="x1"/>)

Now let's write some XQuery to insert two versions in one update, and see what happens.

import module namespace dls="http://marklogic.com/xdmp/dls"

at "/MarkLogic/dls.xqy";

dls:document-checkout-update-checkin(

'test', <x id="x2"/>, "version two", true()),

dls:document-checkout-update-checkin(

'test', <x id="x3"/>, "version three", true())

This throws an XDMP-CONFLICTINGUPDATES error, because these calls to DLS

end up trying to update the same nodes twice in the same transaction.

In implicit commit mode, aka "auto" mode, this is difficult to avoid.

We could ask MarkLogic to extend DLS with a new function

designed for this situation. But that is a long-term solution,

and we need to move on with this implementation.

So what can we do? We might read up on xdmp:invoke, xdmp:eval, etc.

If we are careful, we can write a top-level read-only query

that invokes one or more update transactions.

(: Entry point - must be a read-only query. :)

xdmp:invoke(

'update.xqy',

(xs:QName('URI'), 'test',

xs:QName('NEW'), <x id="x2"/>,

xs:QName('NOTE'), "version two")),

xdmp:invoke(

'update.xqy',

(xs:QName('URI'), 'test',

xs:QName('NEW'), <x id="x3"/>,

xs:QName('NOTE'), "version three"))

This invokes a module called update.xqy, which would look like this:

(: update.xqy :)

import module namespace dls="http://marklogic.com/xdmp/dls"

at "/MarkLogic/dls.xqy";

declare variable $NEW as node() external ;

declare variable $NOTE as xs:string external ;

declare variable $URI as xs:string external ;

dls:document-checkout-update-checkin(

$URI, $NEW, $NOTE, true())

This works - at least, it doesn't throw XDMP-CONFLICTINGUPDATES.

But we have lost atomicity. Each of the two updates runs

as a different transaction. This opens up a potential race

condition, where a second query updates the document

in between our two transactions. That could break our application.

There are ways around this, but they get complicated quickly. They are also difficult to test, so we can never be confident that we have plugged all the potential holes in our process. It would be much more convenient if we could run multiple statements inside one transaction, with each statement able to see the database state of the previous statements.

We can do exactly that using a multi-statement transaction. Let's get our feet wet by looking at a very simple MST.

declare option xdmp:transaction-mode "update";

xdmp:document-insert('temp', <one/>)

;

xdmp:document-insert('temp', <two/>),

xdmp:commit()

There are three important points to this query.

1. The option xdmp:transaction-mode="update"

begins a multi-statment transaction.

1. The semicolon after the first xdmp:document-insert

ends that statement and begins another.

1. The xdmp:commit ends the multi-statement transaction

by commiting all updates to the database.

This runs without error, and we can verify that doc('temp')

contains <two/> after it runs.

But how can we prove that all this takes place in a single transaction?

Let's decorate the query with a few more function calls.

declare option xdmp:transaction-mode "update";

xdmp:get-transaction-mode(),

xdmp:transaction(),

doc('temp')/*,

xdmp:document-insert('temp', <one/>)

;

xdmp:get-transaction-mode(),

xdmp:transaction(),

doc('temp')/*,

xdmp:document-insert('temp', <two/>),

xdmp:commit()

This time we return some extra information within each statement: the transaction mode, the transaction id, and the contents of the test doc. The transaction ids will be different every time, but here is one example.

update

17378667561611037626

<two/>

update

17378667561611037626

<one/>

So the document test started out with the old node <two/>,

but after the first statement it changed to <one/>.

Both statements see the same transaction mode and id.

Try changing the xdmp:transaction-mode declaration to auto, the default.

You should see the mode change to auto, and two different transaction-ids.

This tells us that in update mode we have a multi-statement transaction,

and in auto mode we have a non-atomic sequence of two different transactions.

Before MarkLogic 6, all update statements ran in auto mode.

Now let's apply what we've learned about MST to the original problem: inserting two different versions of a managed document in a single transaction.

import module namespace dls="http://marklogic.com/xdmp/dls"

at "/MarkLogic/dls.xqy";

declare option xdmp:transaction-mode "update";

dls:document-checkout-update-checkin(

'test', <x id="x2"/>, "version two", true())

;

import module namespace dls="http://marklogic.com/xdmp/dls"

at "/MarkLogic/dls.xqy";

dls:document-checkout-update-checkin(

'test', <x id="x3"/>, "version three", true()),

xdmp:commit()

As above, this code uses three important features:

1. Set xdmp:transaction-mode="update" to begin the MST.

1. Use semicolons to end one statement and begin another.

1. Use xdmp:commit to end the MST and commit all updates.

To abort a multi-statement transaction, use xdmp:rollback.

So now you have a new tool for situations where implicit commit

is a little too awkward. Try not to overdo it, though.

In most situations, the default xdmp:transaction-mode="auto"

is still the best path.

External Variables (Code Review, Part II)

September 28, 2012 at 12:34 PM | categories: XQuery, MarkLogic | View CommentsRemember when I talked about XQuery Code Review?

The other day I was forwarding that link to a client,

and noticed that I forgot to mention external variables.

I talked about xdmp:eval and xdmp:value

in the section titled Look_for_injection_paths,

and mentioned that it's usually better to use xdmp:invoke or xdmp:unpath,

which are less vulnerable to injection attacks.

But it can be convenient or even necessary to evaluate dynamic XQuery.

That's what xdmp:eval and xdmp:value are there for, after all.

I've even written tools like Presta

to help you.

Used properly, dynamic queries can be made safe.

The trick is to never let user data directly into your dynamic queries.

Whenever you see xdmp:eval or xdmp:value in XQuery,

ask yourself "Where did this query comes from?"

If any part of it came from user input, flag it for a rewrite.

(: WRONG - This code is vulnerable to an injection attack! :)

xdmp:eval(

concat('doc("', xdmp:get-request-field('uri'), '")'))

Actually there are at least two bugs in this code.

There is a functional problem: what happens if the uri request field

is fubar-"baz"? You might not expect a uri to include a quote,

and maybe that will never legitimately happen in your application.

But if that request-field does arrive, xdmp:value will throw an error:

XDMP-UNEXPECTED: (err:XPST0003) Unexpected token syntax error

That's because you haven't properly escaped the uri in the dynamic XQuery.

And you could escape it. You could even write a function to do that for you.

But if you miss any of the various characters that need escaping,

XDMP-UNEXPECTED will be there, waiting for you.

So far we've only talked about innocent mistakes. But what if someone out there

is actively hostile? Let's say it's me. If I know that your web service

expects a uri request-field, I might guess that your code looks something like

the code above, and try an injection attack.

After a little trial and error, I might find that sending

uri=x"),cts:uris(),(" returns a list of all the documents in your database,

whether you want me to see them or not. Then I can send something like

uri=x"),xdmp:document-delete("fubar. If that document exists,

and security isn't tight... it's gone. Or maybe I will decide to try

xdmp:forest-clear instead.

In SQL we use bind variables to solve both of these problems. Any user input binds to a variable inside the SQL, and the database driver takes care of escaping for us. We no longer have to worry about obscure syntax errors or injection attacks, as long as we remember to use variable for all externally-supplied parameters. In XQuery these are known as external variables.

(: Always use external variables for user-supplied data. :)

xdmp:eval(

'declare variable $URI as xs:string external ;

doc($URI)',

(xs:QName('URI'), xdmp:get-request-field('uri')))

The syntax is a little odd: that second parameter is a sequence of alternating QName and value. Because XQuery doesn't support nested sequences, this means you can't naively bind a sequence to a value. Instead you can pass in XML or a map, or use a convention like comma-separated values (CSV).

(: Using XML to bind a sequence to an external variable. :)

xdmp:eval(

'declare variable $URI-LIST as element(uri-list) external ;

doc($URI-LIST/uri)',

(xs:QName('URI-LIST'),

element uri-list {

for $uri in xdmp:get-request-field('uri')

return element uri { $uri } }))

Even though these examples all use pure XQuery, this code review principle

also applies to XCC code. If you see a Java or .NET program using AdHocQuery,

check to make sure that all user input binds to variables.

Remember, the best time to fix a potential security problem is before the code goes live.

rsyslog and MarkLogic

May 17, 2012 at 06:00 PM | categories: MarkLogic, Linux | View CommentsYou probably know that MarkLogic Server logs important events

to the ErrorLog.txt file. By default it logs events at INFO or higher,

but many development and staging environments change the file-log-level

to DEBUG. These log levels are also available to the xdmp:log function,

and some of your XQuery code might use that for printf-style debugging.

You might even know that MarkLogic also sends important events

to the operating system. On linux this means syslog, and important events

are those at NOTICE and higher by default.

But are you monitoring these events?

How can you set up your MarkLogic deployment so that it will automatically alert you to errors, warnings, or other important events?

Most linux deployments now use rsyslog as their system logging facility.

The full documentation is available,

but this brief tutorial will show you how to set up email alerts for MarkLogic

using rsyslog version 4.2.6.

All configuration happens in /etc/rsyslog.conf.

Here is a sample of what we need for email alerts.

First, at the top of the file you should see several ModLoad declarations.

Check for ommail and add it if needed.

$ModLoad ommail.so # email support

Next, add a stanza for MarkLogic somewhere after the ModLoad declaration.

# MarkLogic

$template MarkLogicSubject,"Problem with MarkLogic on %hostname%"

$template MarkLogicBody,"rsyslog message from MarkLogic:\r\n[%timestamp%] %app-name% %pri-text%:%msg%"

$ActionMailSMTPServer 127.0.0.1

$ActionMailFrom your-address@your-domain

$ActionMailTo your-address@your-domain

$ActionMailSubject MarkLogicSubject

#$ActionExecOnlyOnceEveryInterval 3600

daemon.notice :ommail:;MarkLogicBody

Be sure to replace both instances of your-address@your-domain

with an appropriate value. The ActionMailSMTPServer must be smart enough

to deliver email to that address. I used a default sendmail configuration

on the local host, but you might choose to connect to a different host.

Note that I have commented out the ActionExecOnlyOnceEveryInterval option.

The author of rsyslog, Rainer Gerhards,

recommends setting this value to a reasonably high number of seconds

so that your email inbox is not flooded with messages.

However, the rsyslog documentation states that excess messages

are discarded, and I did not want to lose any important messages.

What I would really like to do is buffer messages for N seconds at a time,

and merge them together in one email.

But while rsyslog has many features, and does offer buffering,

it does not seem to know how to combine consecutive messages

into a single email.

Getting back to what rsyslog can do,

you can customize the subject and body of the mail message.

With the configuration above, a restart of the server

might send you an email like this one:

Subject: Problem with MarkLogic on myhostname.mydomain

rsyslog message from MarkLogic:

[May 17 23:58:36] MarkLogic daemon.notice<29>: Starting MarkLogic Server 5.0-3 i686 in /opt/MarkLogic with data in /var/opt/MarkLogic

When making any rsyslog changes, be sure to restart the service:

sudo service rsyslog restart

At the same time, check your system log for any errors or typos.

This is usually /var/log/messages or /var/log/syslog.

The full documentation for template substitution properties

is online.

You can also read about a wealth of other options available in rsyslog.

Directory Assistance

March 19, 2012 at 12:34 PM | categories: MarkLogic | View CommentsFor a long time now, MarkLogic Server has implemented two distinct features that are both called "directories". This causes confusion, especially since one of these features scales well and the other often causes scalability problems. Let's try to distinguish between these two features, and talk about why they both exist.

Directories were first introduced to accommodate WebDAV.

Since WebDAV clients treat the database as if it were a filesystem,

they expect document URIs with the solidus, or /,

to imply directory structure. That's one feature called "directories":

if you insert a document with the URI /a/b/c.xml, you can call

xdmp:directory('/a/b/', '1') to select that document -

and any other document with the same URI prefix. These URI prefixes

are indexed in much the same way that document URIs and collection URIs

are indexed, so queries are "searchable" and scale well.

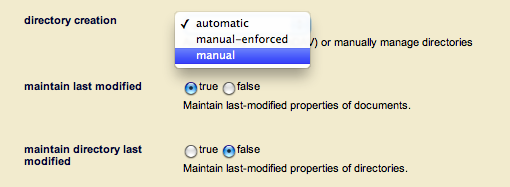

This "implied directory structure" works with any database configuration.

You do not need directory-creation=automatic

to use the cts:directory-query and xdmp:directory functions.

This returns a query plan in XML:

But WebDAV clients expect more than just directory listings. They also want to lock documents and directories. It is easy to understand document locking: the idea here is that a WebDAV-aware editor might lock a document, copy it to the local filesystem for editing, and copy it back to the server when the editing session ends. It may be less clear that a WebDAV client sometimes needs to lock directories, but it does.

Directory locking is implemented using special directory fragments. There are no documents associated with these properties, so they are sometimes called "naked properties." Here is an example.

Once this update has committed to the database, we can query the directory fragment.

Once you have a directory fragment, you have something that the database

can lock for WebDAV clients. It's rare for anything else

to use this behavior, but xdmp:lock-acquire is available for custom

content management applications.

Earlier I mentioned that there are two kinds of "directories", one that scales well and one that sometimes causes problems. I wrote that queries based on directory URIs scale well, so you might guess that directory fragments sometimes cause problems. That's correct, and it results from a database feature called "automatic directory creation".

When automatic directory creation is enabled - as it is by default -

the database will ensure that directory fragments exist for every

implied directory in the URI for every new or updated document.

The document URI /a/b/c.xml implies a directory fragment

for /, /a/, and /a/b/. So the database will ensure that these exist

whenever a request updates /a/b/c.xml.

So what happens when one request updates /a/b/c.xml

and another request updates /a/b/d.xml?

Both requests try to ensure that there are directory fragments

for /, /a/, and /a/b/. This causes lock contention.

The same problem shows up if another request is updating /fubar.xml,

because both queries look for the / directory fragment.

The situation gets worse as concurrency increases.

It gets even worse if "maintain directory last-modified" is enabled,

because the directory fragments have to be updated too.

But happily that feature is not enabled by default.

The solution to this problem is simple. In my experience

at least 80% of MarkLogic Server customers do not use WebDAV,

so they do not need automatic directory creation. Instead,

they can set directory creation to "manual".

Do this whenever you create a new database,

or script it using admin:database-set-directory-creation.

If you do use WebDAV, try to limit its scope. Perhaps you can get by

with a limited number of predefined WebDAV directories,

which you create manually using xdmp:directory-create

as part of your application deployment.

Or perhaps you only use WebDAV for your XQuery modules,

which only contains a few hundred or at most a few thousand documents.

In that case you can use automatic directory creation without a problem.

Generally speaking, really large databases don't use WebDAV anyway. "Big content" databases, with hundreds of millions or billions of documents, tend to be much to large for WebDAV to be useful. For smaller databases where WebDAV is useful, automatic directory creation is fine.

Sometimes it is useful to set "directory-creation" to "manual-enforced".

With this configuration you will see an XDMP-PARENTDIR error

whenever your code tries to insert a document

with an implied directory structure

that does not have corresponding directory fragments.

But this feature is rarely used.

To sum up, directory URIs are highly scalable and very useful,

and are always indexed. Your code can call xdmp:directory

with any database settings.

The default "automatic directory creation" feature creates directory fragments,

which can be a bottleneck for large databases.

Most applications are better off with "directory-creation" set to "manual".